Monitoring and Switching Over to Secondary Server

Monitoring the progress of the sync

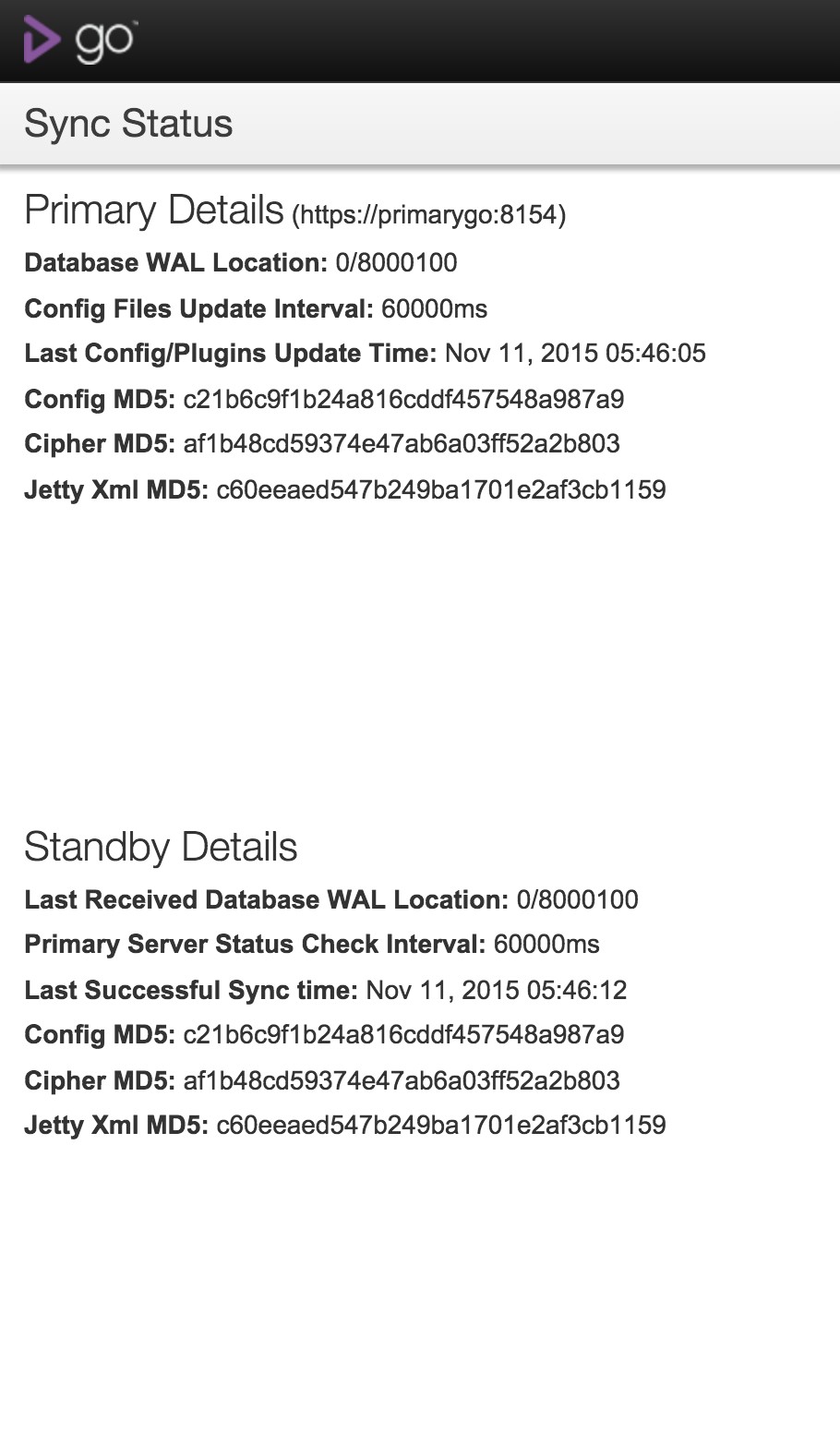

As mentioned in the details part of the “Setup a standby (secondary) GoCD Server” section, the standby dashboard shows the progress of the sync, and refreshes itself every few seconds. An entry showing up in red denotes the sync hasn’t happened, whereas an entry in black denotes that the standby is in sync with the server. You should monitor that the Last Config/Plugins Update Time under Primary Details and Last Successful Sync time under Standby Details are not off by a huge time gap.

If you need it, this information is also available via a JSON API:

http://standby-go-server:port/go/add-on/business-continuity/admin/dashboard.json

The standby GoCD Server dashboard looks like this:
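To check the sync from a script instead of the dashboard (for example, from a cron job that alerts your team), you can poll the JSON API. The following is a hypothetical POSIX shell sketch, not part of GoCD. It assumes `curl` is installed and that you substitute your own host and port in the URL. It also assumes you extract the primary's last update time and the standby's last successful sync time from the JSON as epoch seconds yourself, because the JSON field names are not described here. The check treats the standby as behind only when the primary's last update is newer than the standby's last sync by more than a threshold you choose.

```shell
#!/bin/sh
# Monitoring sketch for the standby GoCD Server (hypothetical helpers).
# Assumptions: the dashboard URL is passed in by the caller, and the two
# timestamps have already been extracted from dashboard.json as epoch
# seconds (the JSON field names are not documented here).

# Print the raw dashboard JSON for the URL given in $1.
# Add credentials (e.g. -u user:password) if your server requires them.
fetch_dashboard() {
  curl -fsS "$1"
}

# sync_lag_ok PRIMARY_UPDATE STANDBY_SYNC MAX_LAG
# Succeeds if the primary's last config/plugins update is at most MAX_LAG
# seconds newer than the standby's last successful sync. Otherwise prints
# a warning to stderr and returns 1.
sync_lag_ok() {
  lag=$(( $1 - $2 ))
  if [ "$lag" -le "$3" ]; then
    return 0
  fi
  echo "WARNING: standby sync is ${lag}s behind the primary" >&2
  return 1
}
```

For example, `fetch_dashboard "http://standby-go-server:port/go/add-on/business-continuity/admin/dashboard.json"` prints the raw JSON. `sync_lag_ok "$primary_ts" "$standby_ts" 600` fails with a warning when the standby is more than ten minutes behind (`primary_ts` and `standby_ts` are placeholder variables).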

Disaster strikes - What now?

Switch standby to primary

If the primary GoCD Server goes down, perform the following steps in order:

- Turn off the primary instances

  If the primary PostgreSQL Server and/or the primary GoCD Server are still accessible, stop those services on their machines.

- Turn off PostgreSQL replication

  The details part of the “Setup a standby PostgreSQL instance for replication” section mentions a `trigger_file`. This is a file whose presence makes the standby PostgreSQL instance stop replicating and become the primary PostgreSQL instance. Create that file now. For instance:

  `touch /path/to/postgresql.trigger.5432`

- Switch standby GoCD Server to primary

  As mentioned in the “You need to know that …” section, the standby GoCD Server must be restarted before it can become the primary GoCD Server. When restarting it, set the `go.server.mode` system property to the value `primary`:

  `-Dgo.server.mode=primary`

  This property was originally mentioned in the details part of the “Setup a standby (secondary) GoCD Server” section of this document. You can also remove the property entirely, since its default value is `primary`.

- Switch virtual IP to point to standby GoCD Server

  As mentioned in the details part of the “Setup a virtual IP for the agents to use” section, you can now assign the virtual IP to the standby GoCD Server.

  Note: If your primary GoCD Server is still up and holds this virtual IP, assigning the virtual IP to the standby GoCD Server will fail. In that case, go to the primary GoCD Server and unassign the virtual IP from it. In particular, you will need to do this if you are switching over because the primary PostgreSQL instance went down.

Note: The console logs of running jobs will be lost. Until a job completes, its console log is stored locally on the GoCD Server, not in the artifact store.
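The failover steps above can be scripted. The following is a minimal POSIX shell sketch, not part of GoCD, and every name in it is an assumption to adapt: the service names `go-server` and `postgresql`, the use of `systemctl` and `ip`, the placeholder virtual IP `192.0.2.10/24` on interface `eth0`, and the `DRY_RUN` switch. It also assumes `go.server.mode` has already been removed or set to `primary` in the standby's startup configuration, because the location of that setting depends on how GoCD was installed.

```shell
#!/bin/sh
# Failover sketch (hypothetical helper functions, adapt before use).
# Assumed names: service names "go-server" and "postgresql", the virtual
# IP 192.0.2.10/24 on interface eth0, and the trigger file path below.
# Set DRY_RUN=1 to print each command instead of executing it.

TRIGGER_FILE="${TRIGGER_FILE:-/path/to/postgresql.trigger.5432}"
VIP="${VIP:-192.0.2.10/24}"
VIP_DEV="${VIP_DEV:-eth0}"

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# On the old primary machine(s), if they are still reachable.
stop_primary_services() {
  run systemctl stop go-server &&
  run systemctl stop postgresql
}

# On the standby machine(s):
# 1. Creating the trigger file makes the standby PostgreSQL leave recovery.
# 2. Restart GoCD; go.server.mode must already be unset or "primary".
# 3. Take over the virtual IP. This fails if the old primary still holds
#    it; release it there first with: ip addr del "$VIP" dev "$VIP_DEV"
failover_to_standby() {
  run touch "$TRIGGER_FILE" &&
  run systemctl restart go-server &&
  run ip addr add "$VIP" dev "$VIP_DEV"
}
```

For example, `DRY_RUN=1 failover_to_standby` prints the three commands for review. Running `failover_to_standby` without `DRY_RUN` executes them in order and stops at the first one that fails.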

Recovery - Back to the primary server

This section assumes the former standby GoCD Server is now running as the primary and the original primary GoCD Server is back in action. It describes how to return to the original primary instances. The main concern during this recovery is syncing the primary and standby PostgreSQL instances. A secondary concern is syncing configuration files and similar state.

Note that this recovery currently requires downtime. This may change in the future.

The steps are largely the same as those for setting up a standby GoCD Server and PostgreSQL instance.

For the purposes of this section:

- PG1: Original primary PostgreSQL instance

- PG2: Original standby PostgreSQL instance

- GO1: Original primary GoCD Server instance (connected to PG1)

- GO2: Original standby GoCD Server instance (connected to PG2)

The steps are:

- Bring down both GoCD Servers, GO1 and GO2.

- Unassign the virtual IP from the GO2 box. See the details part of the “Setup a virtual IP for the agents to use” section for more information.

- Copy the contents of `/etc/go` (or at least `/etc/go/cruise-config.xml`) from GO2 to GO1.

- Use `pg_basebackup` with the `-X fetch` flag to recreate the database on PG1. This brings back to PG1 all the changes made to the database while GO1 was down. For example:

  `pg_basebackup -h <ip_address_of_secondary_postgres_server> -U rep -D <empty_data_directory_on_primary> -X fetch`

  Note: There have been cases where `pg_basebackup` hung on an idle server when using the default WAL (write-ahead log) method, `stream`. We therefore suggest adding the `-X fetch` flag to `pg_basebackup`. For further details, see `wal_keep_segments` and the `fetch` value of the `-X` option in the PostgreSQL documentation.

- On PG2, PostgreSQL will have renamed the `recovery.conf` file to `recovery.done` to indicate that PG2 is now acting as primary. Rename it back to `recovery.conf`. Remove the trigger file you created earlier (`/path/to/postgresql.trigger.5432`), and restart PostgreSQL on PG2. This puts PG2 back into standby mode.

- Start PG1. Since it does not have a `recovery.conf` file, it will start as primary.

- Start GO1, and make sure that `go.server.mode` is either unset or set to `primary`.

- Assign the virtual IP to the GO1 box. See the details part of the “Setup a virtual IP for the agents to use” section for more information.
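The recovery steps span up to four machines, so one way to script them is as a set of small per-machine functions. The sketch below is hypothetical, and the following are all assumptions:

- the placeholder host names `go2.example.com` and `pg2.example.com`
- the data directory `/var/lib/postgresql/data`, taken to be the same path on PG1 and PG2
- the service names, the virtual IP and the interface
- SSH access from GO1 to GO2 for `rsync`
- the `DRY_RUN` switch

Only the `pg_basebackup` invocation, the `recovery.conf`/`recovery.done` file names and the trigger file path come from the steps above.

```shell
#!/bin/sh
# Recovery sketch (hypothetical helper functions, adapt before use).
# Assumed names: GO2_HOST, PG2_HOST, PG_DATA (same path on PG1 and PG2),
# service names "go-server" and "postgresql", and the VIP/interface.
# Set DRY_RUN=1 to print each command instead of executing it.

GO2_HOST="${GO2_HOST:-go2.example.com}"
PG2_HOST="${PG2_HOST:-pg2.example.com}"
PG_DATA="${PG_DATA:-/var/lib/postgresql/data}"
TRIGGER_FILE="${TRIGGER_FILE:-/path/to/postgresql.trigger.5432}"
VIP="${VIP:-192.0.2.10/24}"
VIP_DEV="${VIP_DEV:-eth0}"

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# On GO1 and on GO2: bring down the GoCD Server.
stop_go_server() { run systemctl stop go-server; }

# On GO2: release the virtual IP.
release_vip() { run ip addr del "$VIP" dev "$VIP_DEV"; }

# On GO1: copy the GoCD configuration over from GO2.
copy_go_config_from_go2() { run rsync -a "$GO2_HOST:/etc/go/" /etc/go/; }

# On PG1, with PostgreSQL stopped and PG_DATA empty: rebuild from PG2.
rebuild_pg1() { run pg_basebackup -h "$PG2_HOST" -U rep -D "$PG_DATA" -X fetch; }

# On PG2: restore recovery.conf, drop the trigger file, restart as standby.
return_pg2_to_standby() {
  run mv "$PG_DATA/recovery.done" "$PG_DATA/recovery.conf" &&
  run rm -f "$TRIGGER_FILE" &&
  run systemctl restart postgresql
}

# On PG1: start PostgreSQL as primary (no recovery.conf present).
start_pg1() { run systemctl start postgresql; }

# On GO1: start GoCD (go.server.mode unset or "primary"), take the VIP.
start_go1() {
  run systemctl start go-server &&
  run ip addr add "$VIP" dev "$VIP_DEV"
}
```

Run each function on the machine named in its comment, in the order of the list above. For example, `DRY_RUN=1 return_pg2_to_standby` on PG2 prints its three commands so you can review them before running them for real.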

Whether you perform this recovery often or rarely, we recommend automating it, with the automation started manually. It can involve up to four different boxes, and the communication between them is quite system-specific, so automation is not covered as part of this setup. However, it can be done quite easily, and we recommend it.